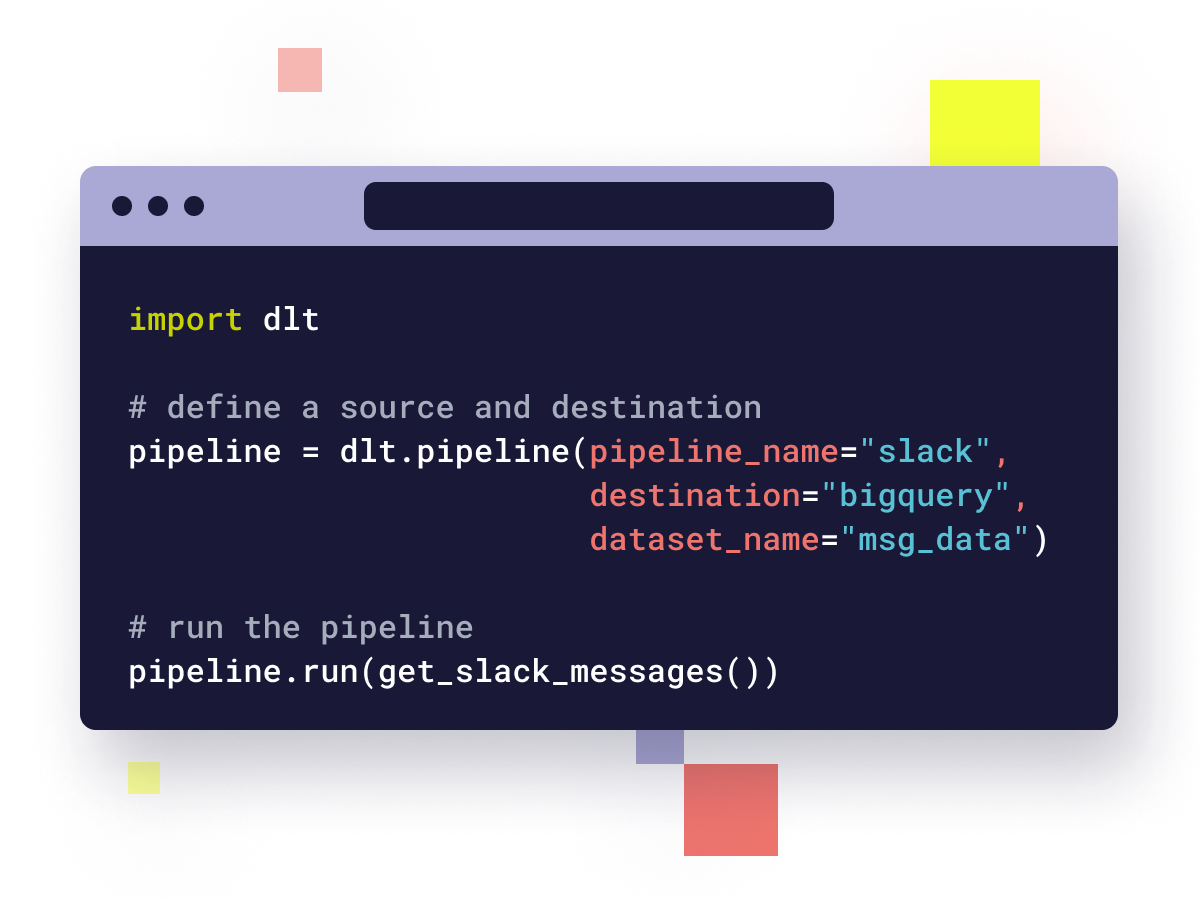

data load tool (dlt)

the python library for data teams loading data in unexpected places

Companies that adopt data democracy for all of their data stakeholders and allow their engineers to build data infrastructure bottom-up will see significant revenue and productivity gains, if they provide the right tools to the right people. Be it a Google Colab notebook, an AWS Lambda function, an Airflow DAG, a local laptop, company data tooling or a GPT-4-assisted development playground, the open-source dlt library can be dropped into any workflow in the modern organisation to fit the needs of a new wave of Python developers.

dlt ecosystem

dlt runs where Python works and fits where the data flows

Verified sources

Our verified sources are the simplest way to get started with building your stack. They are tested daily!

We have high-quality customisations to increase usability, such as automatic column naming and proper data type parsing.

And, of course, we have lots of popular sources, such as SQL, Google Sheets, Zendesk, Stripe, Notion, HubSpot, GitHub and others. Check out the full list here!

Destinations

Pick one of our high quality destinations and load your data to a local database, warehouse or a data lake. Let dlt infer & evolve the schema. Pass hints to merge, deduplicate, partition or cluster your data.

With this automation, your most tedious work is done.

That frees you to focus on data testability and quality: develop against a local DuckDB database or Parquet files, then easily switch to whatever production destination you need. The schema is destination-agnostic!

Helpers & integrations

dlt is designed to be a part of an ecosystem and we make it even easier with our helpers.

dlt's integrations enable you to build robust pipelines quickly and go to production even faster. Streamlit, Airflow and GitHub Actions are natively supported.

Our helpers also cater to many common needs: requests with retries, persistent state dictionaries in Python, extraction DAGs, declarative incremental loading, and scalable generators.

our users love what happens when they adopt dlt

read more testimonials on our why page

Leveraging dlt has changed our data operations. It has empowered our internal stakeholders, including product, business and operations teams, to independently satisfy most of their data needs through self-service. This shift in responsibility has accelerated the pace of our DataOps team: we spend less time on the EL and more on the T, whilst still being able to deeply customize our extractors as business requirements evolve.

I chose dlt because I immediately saw the value in its core proposition. The most manual, error-prone part of a data engineer's job is two-fold: managing evolving schemas, and consistently loading data to a destination from in-memory data structures (lists, dicts). dlt has enabled me to completely rewrite all of our core SaaS service pipelines in 2 weeks and have data pipelines in production with full confidence that they will never break due to changing schemas. Furthermore, we completely removed our reliance on disparate, unreliable external Singer components whilst maintaining a very light code footprint.

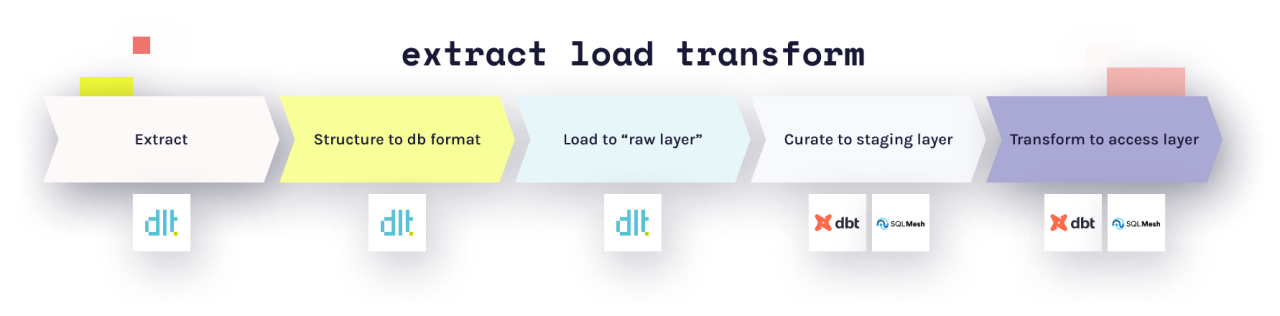

supercharge your SQL transformation pipelines with dlt

- Fuel your SQL pipelines with data, and become independent of vendors and engineers

- Improve your data quality with dlt's schema evolution alerts and rich metadata that enables incremental testing

- Easily switch between local testing and online production by toggling the dlt destination

- Take advantage of dlt's native integrations with Airflow and dbt, and of features like versioning

dlt + dbt can be a killer combination for customers. dlt is built on Python libraries and is compatible with any API or function that can return data in JSON or a similar format. It currently supports schema inference, is declarative in nature, is easy to install and can be dockerized. Given that it's built on Python libraries, the near future looks very promising for incorporating end-to-end governance capabilities in the EL space: support for data contracts, data profiling, data quality and data observability, and incorporating GPT-4 to provide co-pilot functionality.

how to generate a dlt pipeline from the OpenAPI spec

Watch dltHub co-founder Marcin Rudolf generate a Pokemon dlt pipeline from the OpenAPI spec, then use Streamlit to inspect the data dlt loaded into DuckDB.